In this article, we'll explore how four leading AI platforms (OpenAI, Groq, Gemini, and Mistral) handle JSON formatting. Knowing how each one works is key to getting clean, structured data from AI responses.

Why is this important? JSON is one of the most widely used formats for exchanging data between applications. With features such as OpenAI's Structured Outputs, you can ensure AI responses always follow a JSON Schema you specify, avoiding issues like missing keys or invalid values.

Whether you're a seasoned developer or just starting with AI integration, this guide will help you master JSON in AI platforms, making your applications more reliable and efficient.

JSON, or JavaScript Object Notation, is like a universal language for data: a way to organize information in a format that both humans and computers can easily read. AI platforms are embracing JSON because:

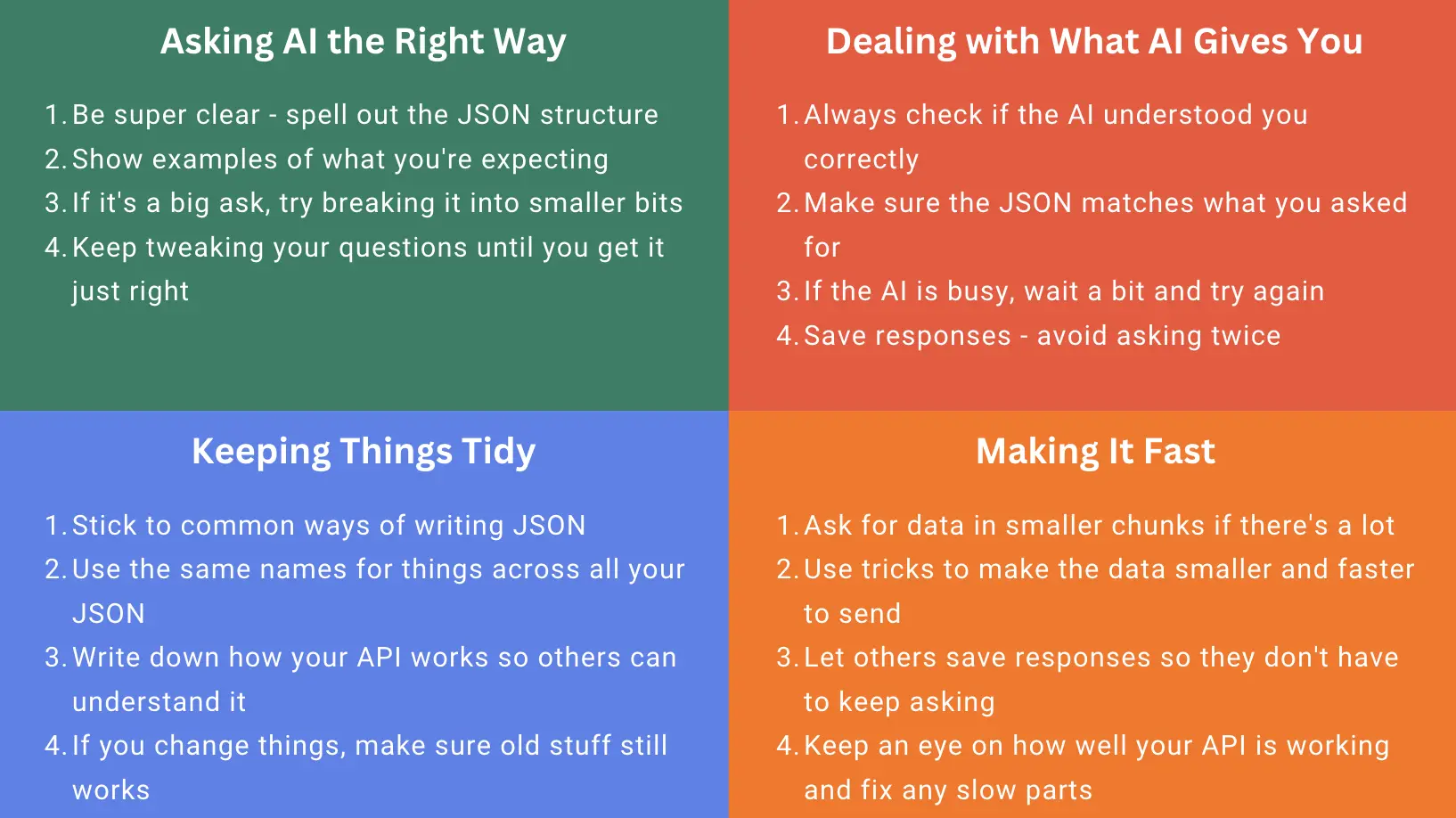

When you're trying to get JSON data from AI APIs, there are some tricks that can make your life a whole lot easier. By following these tips, you'll be a pro at getting clean, useful JSON from AI in no time!

OpenAI offers two methods for generating structured JSON responses: JSON mode and the more advanced Structured Outputs feature. JSON mode ensures the response is valid JSON, while Structured Outputs goes a step further by guaranteeing that the response adheres to a JSON Schema you supply. Structured Outputs is available on newer models, starting with GPT-4o (for example gpt-4o-mini and gpt-4o-2024-08-06), and gives developers precise control over the structure of AI-generated content. By defining exact schemas for the JSON you want, you can significantly reduce the need for post-processing and validation.

Example in Python:
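Here is a minimal sketch of a Structured Outputs request, assuming the official `openai` Python package (v1.x) and an `OPENAI_API_KEY` environment variable. The recipe schema, the helper names `build_response_format` and `ask_for_recipe`, and the model snapshot are illustrative choices, not fixed parts of the API.

```python
import json
import os

# Hypothetical schema for illustration: a recipe with a name and a list of ingredients.
RECIPE_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "ingredients": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["name", "ingredients"],
    "additionalProperties": False,
}


def build_response_format(schema: dict, name: str = "recipe") -> dict:
    """Wrap a JSON Schema in the response_format payload used by Structured Outputs."""
    return {
        "type": "json_schema",
        "json_schema": {
            "name": name,
            "strict": True,  # ask the API to enforce the schema exactly
            "schema": schema,
        },
    }


def ask_for_recipe(prompt: str) -> dict:
    # Imported here so the helper above can be used without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    completion = client.chat.completions.create(
        model="gpt-4o-2024-08-06",  # assumption: any Structured Outputs-capable model
        messages=[{"role": "user", "content": prompt}],
        response_format=build_response_format(RECIPE_SCHEMA),
    )
    message = completion.choices[0].message
    if message.refusal:  # the model may explicitly refuse instead of returning JSON
        raise RuntimeError(message.refusal)
    return json.loads(message.content)


if __name__ == "__main__" and os.environ.get("OPENAI_API_KEY"):
    print(ask_for_recipe("What is the best French meal? Return its name and ingredients."))
```

Setting `strict` to `True` is what turns "some valid JSON" into "JSON matching this schema". In strict mode every property must be listed in `required` and objects must set `additionalProperties` to `false`, which is why the schema spells both out.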

Example in JavaScript:

Important Notes:

By using Structured Outputs, you can ensure that your AI responses are always in the format you need, making your applications more robust and easier to develop.

Groq offers a "JSON mode" that ensures all chat completions are valid JSON. Here's how you can use it effectively:
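A minimal sketch of JSON mode with Groq's Python SDK, assuming the `groq` package and a `GROQ_API_KEY` environment variable. The model name is an assumption (check Groq's current model list), and `groq_json_request` and `ask_groq` are hypothetical helper names. Because JSON mode only guarantees syntactically valid JSON, not a particular shape, the system message also describes the keys we expect.

```python
import json
import os


def groq_json_request(question: str, model: str = "llama-3.1-8b-instant") -> dict:
    """Build keyword arguments for a Groq chat completion in JSON mode."""
    return {
        "model": model,  # assumption: substitute a current JSON-mode-capable model
        "messages": [
            # JSON mode works best when the prompt itself asks for JSON and names the keys.
            {
                "role": "system",
                "content": "Reply only with a JSON object with keys 'name' and 'ingredients'.",
            },
            {"role": "user", "content": question},
        ],
        "response_format": {"type": "json_object"},
    }


def ask_groq(question: str) -> dict:
    from groq import Groq  # imported lazily so the builder works without the SDK

    client = Groq()  # reads GROQ_API_KEY from the environment
    completion = client.chat.completions.create(**groq_json_request(question))
    return json.loads(completion.choices[0].message.content)


if __name__ == "__main__" and os.environ.get("GROQ_API_KEY"):
    # Pretty-print the parsed object for readability.
    print(json.dumps(ask_groq("What is the best French meal?"), indent=2))
```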

Important Notes:

By using JSON mode, you can ensure that your Groq API responses are always in a valid JSON format, making it easier to integrate AI-generated content into your applications.

Google's Gemini API offers powerful capabilities for generating structured JSON outputs, which are ideal for applications requiring standardized data formats.
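One way to request schema-constrained JSON from Gemini, sketched under the assumption that you use the `google-generativeai` Python package with a `GEMINI_API_KEY` environment variable. `Recipe`, `gemini_json_config`, `ask_gemini`, and the `gemini-1.5-flash` model name are illustrative. The schema is written with Python type hints (a `TypedDict`), one of the schema styles that package accepts.

```python
import json
import os
from typing import TypedDict


class Recipe(TypedDict):
    """Hypothetical schema: the shape we want each JSON item to have."""

    recipe_name: str
    ingredients: list[str]


def gemini_json_config() -> dict:
    """Keyword arguments for genai.GenerationConfig that request schema-shaped JSON."""
    return {
        "response_mime_type": "application/json",  # ask for JSON instead of prose
        "response_schema": list[Recipe],  # constrain the reply to a list of Recipe objects
    }


def ask_gemini(prompt: str) -> list:
    import google.generativeai as genai  # lazy import: only needed for the live call

    genai.configure(api_key=os.environ["GEMINI_API_KEY"])
    model = genai.GenerativeModel("gemini-1.5-flash")  # assumption: any JSON-capable model
    result = model.generate_content(
        prompt,
        generation_config=genai.GenerationConfig(**gemini_json_config()),
    )
    return json.loads(result.text)


if __name__ == "__main__" and os.environ.get("GEMINI_API_KEY"):
    print(ask_gemini("List a few popular cookie recipes with their ingredients."))
```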

Best Practices:

Mistral offers a straightforward approach to generating structured JSON outputs: set `response_format` to `{"type": "json_object"}` in the chat request and ask for JSON explicitly in the prompt.

Step-by-Step Guide with Code Example:

```python
import os

from mistralai import Mistral

# Set up API key and model
api_key = os.environ["MISTRAL_API_KEY"]
model = "mistral-large-latest"

# Initialize Mistral client
client = Mistral(api_key=api_key)

# Define the message requesting JSON output
messages = [
    {
        "role": "user",
        "content": "What is the best French meal? Return the name and ingredients in a short JSON object.",
    }
]

# Request chat completion with JSON format
chat_response = client.chat.complete(
    model=model,
    messages=messages,
    response_format={"type": "json_object"},
)

# Print the JSON response
print(chat_response.choices[0].message.content)
```

Expected Output:

When it comes to generating structured JSON outputs, each AI platform has its own approach. Here's a comparison of OpenAI, Groq, Gemini, and Mistral:

| Platform | Key characteristics |
| --- | --- |
| OpenAI | ● Precise schema control ● Type safety and explicit refusals ● More complex setup |
| Groq | ● Simple JSON mode activation ● Pretty-printed JSON support ● Limited to specific models (Mixtral > Gemma > Llama) |
| Gemini | ● Flexible schema definition (type hints or protobuf) ● Works with both Flash and Pro models ● May require more prompt engineering for consistency |
| Mistral | ● Straightforward implementation ● Available for all Mistral models ● Requires explicit JSON requests in prompts |

When selecting a platform, consider your specific needs for schema complexity, ease of implementation, and the level of control required over the JSON output. Always test the outputs across different platforms to ensure they meet your application's requirements for structure, consistency, and accuracy.
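As a starting point for that testing, here is a small, platform-agnostic check written with only the Python standard library. `check_json_output` and `EXPECTED` are hypothetical names, not part of any SDK: the helper parses a model's reply and reports missing keys or wrong types against a simple expected-shape mapping. For full JSON Schema validation you would likely reach for a dedicated validator such as the `jsonschema` package instead.

```python
import json

# Hypothetical expected shape: key -> required Python type after json.loads.
EXPECTED = {"name": str, "ingredients": list}


def check_json_output(raw: str, expected: dict) -> list:
    """Return a list of problems found in a model's JSON reply (empty list means OK)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return [f"expected a JSON object, got {type(data).__name__}"]
    problems = []
    for key, expected_type in expected.items():
        if key not in data:
            problems.append(f"missing key: {key}")
        elif not isinstance(data[key], expected_type):
            problems.append(f"wrong type for {key}: {type(data[key]).__name__}")
    return problems


print(check_json_output('{"name": "Coq au vin", "ingredients": ["chicken", "wine"]}', EXPECTED))  # []
print(check_json_output('{"name": "Ratatouille"}', EXPECTED))  # ['missing key: ingredients']
```

Running the same check over replies from each platform gives a quick, like-for-like view of which one returns the structure your application expects.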

As we've explored, AI-powered JSON generation is changing how developers interact with and leverage AI models. From OpenAI's Structured Outputs to Groq's JSON mode, Gemini's flexible schemas, and Mistral's straightforward approach, each platform offers its own way of producing structured data.

Looking ahead, we can expect even more sophisticated JSON generation techniques, including:

● Enhanced schema validation and error handling

● More intuitive ways to define complex, nested structures

● Improved consistency and reliability in generated outputs

● Integration with data validation and transformation pipelines

The future of AI API responses lies in providing developers with greater control, flexibility, and efficiency in working with structured data. As these technologies evolve, they will enable a more seamless integration of AI capabilities into a wide range of applications and services.

We encourage you to experiment with these JSON generation techniques across different AI platforms. By doing so, you'll not only enhance your applications but also contribute to the ongoing evolution of AI-powered data structuring. After obtaining your JSON, you can leverage it for data integrations or create documents by converting JSON to Word or JSON to PDF formats.